The Metropolitan Police is embroiled in a significant High Court legal battle concerning its deployment of live facial recognition (LFR) cameras, following an incident where an anti-knife crime campaigner was erroneously identified as a suspect. This case highlights growing tensions between law enforcement's use of advanced surveillance technology and fundamental civil liberties.

Wrongful Identification Sparks Legal Action

Shaun Thompson, a respected 39-year-old Black community worker, was incorrectly flagged as a criminal after being filmed at London Bridge station in February 2024. Mr Thompson, who was returning home to Croydon after a voluntary anti-knife crime shift, was detained for approximately 30 minutes under threat of arrest. He claims that officers demanded identity documents and fingerprint scans and inspected him for scars and tattoos in an attempt to confirm he was the suspect, even though he had provided identification showing that he had been misidentified.

Mr Thompson described the police's use of live facial recognition technology as 'stop and search on steroids', emphasising the intrusive nature of the encounter. The civil liberties campaign group Big Brother Watch is bringing the High Court case on his behalf, arguing that the incident violated his privacy rights under Article 8 of the European Convention on Human Rights (ECHR).

How Live Facial Recognition Technology Works

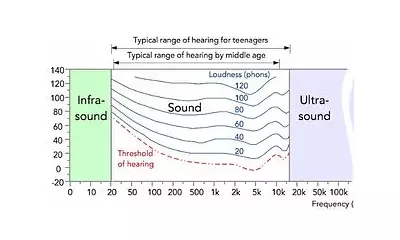

Live facial recognition allows police to identify wanted individuals in crowds in real time. Cameras, which resemble standard CCTV, capture digital images of passing pedestrians. Biometric software then analyses facial features and compares them against a watchlist of wanted criminals, individuals banned from certain areas, or those posing a risk to the public. If a match is detected, an alert is sent to officers; if not, the biometric data is immediately deleted. The Metropolitan Police states that the technology has produced just 10 false alerts from three million images, a rate of about 0.0003%.
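The match-or-delete flow and the quoted false alert rate can be illustrated with a minimal Python sketch. It is not the Metropolitan Police's system: the `WatchlistEntry` type, the use of numeric face templates, the cosine similarity comparison, and the 0.9 threshold are all hypothetical choices made only for illustration. The only figures taken from the article are the 10 false alerts and three million images.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class WatchlistEntry:
    """Hypothetical watchlist record: an identifier plus a numeric face template."""
    person_id: str
    template: Sequence[float]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Similarity between two templates (an assumed comparison method)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def check_face(
    template: Sequence[float],
    watchlist: Sequence[WatchlistEntry],
    threshold: float = 0.9,  # assumed value, not a published setting
) -> Optional[str]:
    """Return the best-matching watchlist ID, or None when nothing clears the threshold.

    A None result stands in for "no alert": the caller keeps no copy of the
    template, mirroring the article's statement that unmatched data is deleted.
    """
    best_id, best_score = None, threshold
    for entry in watchlist:
        score = cosine_similarity(template, entry.template)
        if score >= best_score:
            best_id, best_score = entry.person_id, score
    return best_id


def false_alert_rate_percent(false_alerts: int, images_scanned: int) -> float:
    """False alerts as a percentage of all images scanned."""
    return 100.0 * false_alerts / images_scanned


if __name__ == "__main__":
    watchlist = [
        WatchlistEntry("A", (1.0, 0.0)),
        WatchlistEntry("B", (0.0, 1.0)),
    ]
    print(check_face((1.0, 0.0), watchlist))   # identical to entry "A"
    print(check_face((0.7, 0.7), watchlist))   # below threshold for both entries
    print(f"{false_alert_rate_percent(10, 3_000_000):.4f}%")
```

With the article's figures, 10 divided by 3,000,000 is about 0.00033%, which matches the quoted 0.0003% after rounding. Note that this rate is measured against every image scanned, not against the number of alerts raised, so it does not by itself say what share of alerts are wrong.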

Legal Arguments and Civil Liberties Concerns

Big Brother Watch contends that the rules governing the deployment of LFR cameras are excessively permissive, breaching Article 8 of the ECHR, and that the technology operates without proper legal constraints. The group argues that the definition of 'crime hotspots' is so broad that most public spaces in London could be subject to surveillance, creating 'no meaningful constraint' on its use across the capital.

Silkie Carlo, Director of Big Brother Watch, stated: 'The possibility of being subjected to a digital identity check by police without our consent almost anywhere, at any time, is a serious infringement on our civil liberties that is transforming London. When used as a mass surveillance tool, LFR reverses the presumption of innocence and destroys any notion of privacy in our capital.' The legal challenge also alleges breaches of rights to freedom of expression and assembly under Articles 10 and 11 of the ECHR, claiming the technology has a chilling effect on protest.

Government Support and Planned Expansion

Home Secretary Shabana Mahmood has defended plans to roll out live facial recognition to all 43 police forces in England and Wales, announcing that the number of LFR vans will triple, with 50 vans available to each force. She told LBC: 'Of course, it has to be used in a way that is in line with our values, doesn’t lead to innocent people being caught up in cases they shouldn’t have been involved in. But this technology is what is working. It’s already led to 1,700 arrests in the Met alone. I think it’s got huge potential.'

The government asserts that the technology will be governed by data protection, equality, and human rights laws, and that faces flagged by the system will be reviewed by officers before any action is taken. Currently, it can only search against watchlists of wanted criminals, suspects, or individuals subject to bail or court orders.

Criticism and Calls for Regulation

Despite police assurances, rights groups express deep concerns. Matthew Feeney, Advocacy Manager at Big Brother Watch, commented: 'An expansion of facial recognition on this scale would be unprecedented in liberal democracies, and would represent the latest in a regrettable trend. Police across the UK have already scanned the faces of millions of innocent people who have done nothing except go about their days on high streets across the country.'

The government has yet to complete its consultation on facial recognition, which is intended to lead to a legal framework for deployment. A Metropolitan Police spokesperson said: 'We have stringent safeguards in place to protect people’s rights and privacy. Independent testing confirms that the technology performs consistently across demographic groups, and our operational data shows an exceptionally low false alert rate. We are committed to providing clear reassurance that rigorous checks, oversight, and governance are embedded at every stage.'

This High Court case marks a pivotal moment in the debate over surveillance technology, one that weighs crime prevention against the protection of individual freedoms in an increasingly monitored society.