Artificial intelligence chatbots are significantly more likely to draw their answers to news-related queries from Left-wing media outlets such as the Guardian and the BBC, according to a new analysis from a leading think tank. The Institute for Public Policy Research (IPPR) has published findings today indicating that the popular tools used by millions are drawing upon what it describes as a 'narrow and inconsistent' range of sources, potentially skewing the information landscape.

Dominance of Left-Leaning Outlets in AI Responses

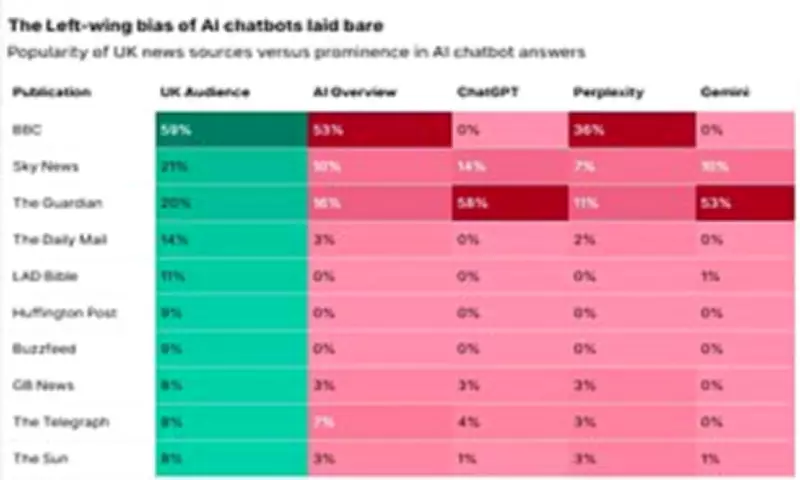

The IPPR analysis uncovered a pronounced pattern in how these AI systems select their reference material. The BBC was the most frequently cited source in Google AI Overview answers, appearing in 52.5% of responses. It was also the most-cited source for the AI tool Perplexity, appearing in 36% of its answers.

Meanwhile, the Guardian took the top spot for both ChatGPT and Google Gemini, referenced in 58% and 53% of their answers respectively. This dominance stands in stark contrast to the representation of Right-leaning publications. The Daily Mail, alongside other outlets with a more conservative editorial stance, appeared in only a small fraction of the AI-generated answers. This marginalisation occurs despite these publications collectively commanding a significant share of the UK media audience.

Concerns Over Editorialisation and Transparency

The think tank's findings have sparked serious concerns that this selective sourcing by AI companies is effectively creating a new generation of 'winners and losers' within the media ecosystem. IPPR experts have warned that this source selection process risks dangerously narrowing the spectrum of perspectives to which users are exposed. This could have the unintended consequence of amplifying specific viewpoints or political agendas without users being aware of it.

Roa Powell, a senior research fellow at the IPPR, emphasised the gravity of the situation, stating: 'AI tools are rapidly becoming the front door to news, but right now that door is being controlled by a handful of tech companies with little transparency or accountability.' The problem is compounded by the opaque operational rules governing these AI systems. The murky guidelines mean that AI tools may pay for and subsequently prioritise certain outlets, exploit other content without compensation, or even exclude sources that actively block AI access to their material.

This complex web of access is illustrated by the differing strategies of major publishers. Some, like the Guardian, have entered into formal licensing agreements with firms such as OpenAI, the creator of ChatGPT. In contrast, others, including the BBC, have attempted to block AI companies from freely accessing their content. A critical issue identified by the researchers is that AI tools consistently fail to inform users about the rationale behind their sourcing decisions. This lack of disclosure means users are often completely unaware of the editorial filtering occurring behind the scenes.

Threats to Journalism's Financial Sustainability

Beyond concerns over bias, researchers argue that the unchecked rise of AI could have severe consequences for the financial sustainability of quality journalism. The IPPR analysis highlights a direct economic threat: when a Google AI Overview is presented in response to a query, users are almost half as likely to click through to the original news publishers' websites. This reduction in traffic threatens the advertising and subscription revenues that fund newsrooms.

Owen Meredith, chief executive of the News Media Association, commented on the report's implications: 'As the Report demonstrates, weakening UK copyright law would deprive publishers of reward and payment for the trusted journalism that enables AI to be accurate and up to date.' He called for regulatory intervention, adding: 'The CMA must intervene swiftly to stop Google using its dominant position to force publishers to fuel its AI chatbots for free. Fair payment from the market leader is critical to a functioning licensing market and to preventing big tech incumbents from monopolising AI.'

Calls for Proactive Government Policy

The report concludes with a powerful call for deliberate policy action to steer AI development towards more socially beneficial outcomes. Carsten Jung, associate director for economic policy and AI at the IPPR, stated: 'So far, much of AI policy has sought to accelerate AI development. But we are coming to a stage where we need to more deliberately steer AI policy towards socially beneficial outcomes.'

He argued that in the news domain, the tools exist to ensure AI enhances rather than damages the public sphere, improving both the quality and diversity of information people access. However, he stressed this positive outcome is not inevitable, noting: 'But this won't happen by itself – the government needs to shape it. We should learn the lessons from the past and shape emerging technologies before it is too late.' The think tank's work underscores an urgent need for transparency, fair compensation, and regulatory frameworks to govern how AI systems interact with and represent the news media.