Artificial intelligence has generated a controversial ranking of the UK's most and least racist towns and cities, but the academics behind the study insist it reveals more about the AI's biases than about the places themselves. Researchers from the University of Oxford conducted a massive analysis of ChatGPT, asking the popular chatbot a staggering 20.3 million questions to uncover how it represents locations globally.

The AI's Controversial UK Ranking

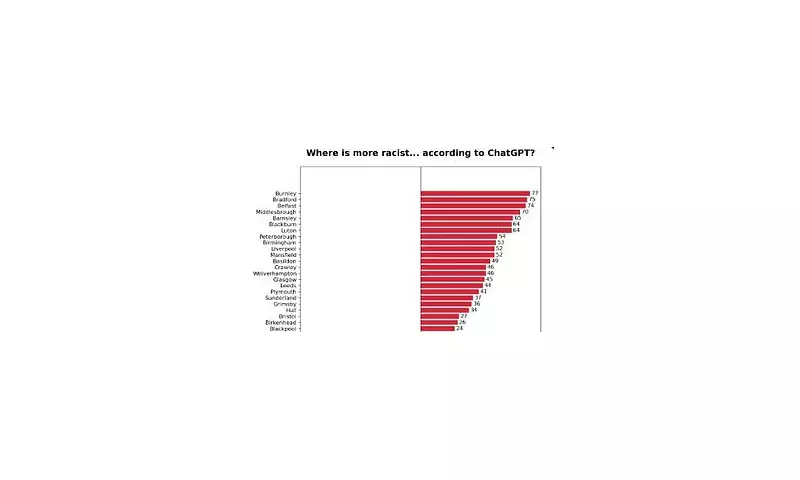

When specifically prompted to identify the most racist towns and cities in the United Kingdom, ChatGPT placed Burnley at the top of the list. It was closely followed by Bradford, Belfast, Middlesbrough, Barnsley, and Blackburn. Further down the AI-generated ranking came Luton, Peterborough, Birmingham, Liverpool, and Mansfield.

At the opposite end of the spectrum, the chatbot presented Paignton in Devon as the least racist town in the country. It was joined by Swansea, Farnborough, Cheltenham, Reading, Cardiff, Eastbourne, and Milton Keynes in being characterised by the AI as places with low racial prejudice.

A Map of Reputation, Not Reality

The lead author of the study, Professor Mark Graham from the University of Oxford, was quick to offer a crucial disclaimer when speaking to the Daily Mail. "ChatGPT is not measuring racism in the real world," he stated emphatically. "It is not checking official figures, speaking to residents, or weighing up local context. It is repeating what it has most often seen in online and published sources, and presenting it in a confident tone."

Professor Graham explained that the results should be interpreted as "a map of reputation in the model's training material." He elaborated: "If a place has been written about more often in connection with words and stories about racism, sectarianism, tensions, conflict, prejudice, far-right activity, riots, or discrimination, the model is more likely to echo that connection."

The Growing Influence and Risk of AI Bias

The research, published in the journal Platforms & Society, comes at a time when AI tools like ChatGPT have moved from niche concepts to daily utilities. The study notes that by 2025, over half of all adults in the US reported using large language models, with global use expanding rapidly in both scope and scale.

This widespread adoption raises significant concerns. "We need to make sure that we understand that bias is a structural feature of AI because they inherit centuries of uneven documentation and representation, then re-project those asymmetries back onto the world with an authoritative tone," Professor Graham warned. He stressed that ChatGPT is not an accurate mirror of the world but rather "reflects and repeats the enormous biases within its training data."

The researchers are deeply concerned that as more people rely on AI for information, these embedded biases could become self-perpetuating. "They will enter all of the new content created by AI, and will shape how billions of people learn about the world," the study cautions. "The biases therefore become lodged into our collective human consciousness."

The ultimate aim of the Oxford team is to foster a healthy scepticism among AI users. They hope the findings will encourage the public to critically question the responses generated by tools like ChatGPT, rather than accepting them as objective truth. The study serves as a stark reminder that an AI's confident answer is often a reflection of pre-existing patterns in the data it was fed, not a definitive assessment of complex social realities.