White House AI Imagery Triggers Alarm Over Misinformation and Eroding Public Trust

The Trump administration's use of AI-generated and digitally altered imagery on official White House social media channels has alarmed misinformation experts and media literacy researchers. The approach, which includes sharing cartoon-like visuals, memes, and realistically edited photographs, appears aimed at engaging the President's online base. Critics argue that it undermines public trust in government institutions and deepens an existing crisis of information authenticity.

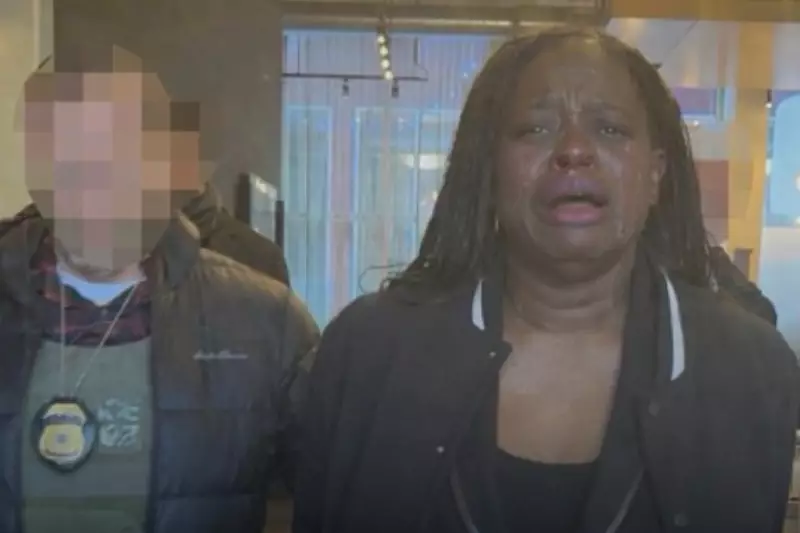

Doctored Image of Civil Rights Attorney Sparks Fresh Controversy

A particularly alarming incident involved a realistically edited image of civil rights attorney Nekima Levy Armstrong, depicting her in tears following her arrest. Homeland Security Secretary Kristi Noem's account first posted the original arrest photograph; the official White House account then shared the manipulated version. The doctored picture emerged amid a deluge of AI-edited political imagery circulated after the fatal shootings of Renee Good and Alex Pretti by federal immigration agents in Minneapolis.

In response to criticism over the altered image of Levy Armstrong, White House officials defiantly reinforced their stance. Deputy communications director Kaelan Dorr stated on the platform X that "the memes will continue," while Deputy Press Secretary Abigail Jackson shared posts mocking the detractors. This defensive posture has only intensified scrutiny from academic circles.

Experts Decry Erosion of Institutional Credibility

David Rand, a professor of information science at Cornell University, says that labelling the altered arrest image a meme appears to be an attempt to frame it as humorous or trivial, shielding the administration from criticism for disseminating manipulated media. He notes that the purpose behind sharing this particular image seems "much more ambiguous" than that of the administration's previous cartoonish posts.

Michael A. Spikes, a professor at Northwestern University and news media literacy researcher, expressed profound concern. "The government should be a place where you can trust the information, where you can say it's accurate, because they have a responsibility to do so," Spikes argued. "By sharing this kind of content, and creating this kind of content... it is eroding the trust we should have in our federal government to give us accurate, verified information. It's a real loss, and it really worries me a lot." He fears this behaviour from official channels inflames existing "institutional crises" surrounding distrust in news organisations and higher education.

Strategic Engagement or Dangerous Precedent?

According to Zach Henry, a Republican communications consultant and founder of the influencer marketing firm Total Virality, AI-enhanced imagery is merely the latest tool used to captivate the segment of Trump's base that is perpetually online. "People who are terminally online will see it and instantly recognize it as a meme," Henry explained. "Your grandparents may see it and not understand the meme, but because it looks real, it leads them to ask their kids or grandkids about it." He added that provoking a fierce reaction is advantageous, as it helps content achieve viral status.

Ramesh Srinivasan, a professor at UCLA and host of the Utopias podcast, warned that AI systems are poised to "exacerbate, amplify and accelerate" problems related to an absence of trust and a blurred understanding of reality. He contends that when the White House and other officials share AI-generated content, it not only encourages everyday users to post similar material but also grants implicit permission to other credible figures, such as policymakers, to disseminate unlabelled synthetic content.

Proliferation of Fabricated Immigration Content

The issue extends beyond isolated imagery. AI-generated videos depicting Immigration and Customs Enforcement (ICE) actions, protests, and interactions with citizens have proliferated across social platforms. After Renee Good was shot by an ICE officer, several fabricated videos surfaced showing women driving away from officers. Numerous other falsified clips depict immigration raids and confrontations with ICE personnel.

Jeremy Carrasco, a content creator specialising in media literacy and debunking viral AI videos, suggests many such videos come from accounts that are "engagement farming," capitalising on clicks by using popular keywords like ICE. However, he also observes that viewers who oppose ICE and the Department of Homeland Security might watch these videos as a form of "fan fiction" or "wishful thinking," hoping they represent genuine resistance. Carrasco emphasises that most viewers cannot tell whether a video is authentic, which raises critical questions about their ability to judge reality in higher-stakes situations.

A Future Fraught with Digital Deception

Even when AI generation leaves blatant clues, such as nonsensical street signs, a viewer would be attentive or knowledgeable enough to identify the manipulation only in the "best-case scenario," Carrasco said. This challenge is not confined to immigration-related news; fabricated imagery following the capture of deposed Venezuelan leader Nicolás Maduro also spread widely earlier this month.

Carrasco believes the dissemination of AI-generated political content will only become more commonplace. He views the widespread adoption of a watermarking system, like that developed by the Coalition for Content Provenance and Authenticity, which embeds origin information into media metadata, as a potential step toward a solution. However, he doubts such measures will see extensive implementation for at least another year.

"It's going to be an issue forever now," Carrasco concluded grimly. "I don't think people understand how bad this is." The intersection of political strategy, advanced technology, and public information integrity presents a formidable and enduring challenge for democratic societies.