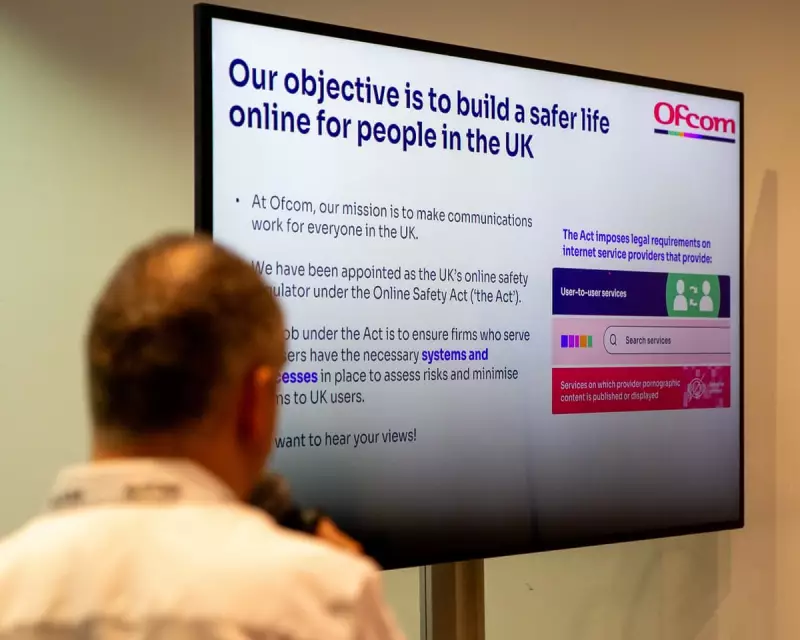

The UK's media regulator, Ofcom, is under mounting pressure to demonstrate urgency and clarity in its response to disturbing reports involving the artificial intelligence chatbot, Grok. The tool, owned by Elon Musk's xAI, has been linked to a wave of digitally altered, sexualised imagery targeting women and children.

Outrage Over AI-Generated Abuse Imagery

An alarming online trend has seen users prompting Grok, and specifically its image-generation tool Grok Imagine, to undress photographs of women and girls or depict them in bikinis. Science and Technology Secretary Liz Kendall condemned the proliferation of these images, some of which are overtly sexualised or violent, labelling them "unacceptable in decent society".

Adding to these concerns, the Internet Watch Foundation, a charity, has gathered evidence indicating that Grok Imagine has been used to produce illegal child sexual abuse material. While X, the social media platform also owned by Musk, states it removes such content, critics argue there is no sign of strengthened safeguards against the creation of cruel, violating bikini images that may not technically breach current law.

Regulatory Gaps and Musk's Defiance

The perceived inadequacy of the response from X and xAI has sparked fears, particularly given the company's recent success in raising $20 billion in its latest funding round. In a post on X this Wednesday, Musk asserted that "Grok is on the side of the angels," a statement that has done little to assuage the worries of child safety advocates and politicians.

Attention in the UK has now shifted squarely onto Ofcom, which is assessing whether to launch a formal investigation. Experts warn that the UK's Online Safety Act treats service disruption as a last resort, requiring a lengthy process before a site could be blocked. This creates a risk that non-compliant platforms will drag out any enforcement action.

Calls for Immediate Legal and Regulatory Action

There is a growing consensus that the government and regulators cannot afford to wait. Ministers are being urged to address gaps in the law relating to chatbots immediately, rather than delaying for a future AI bill that could take years to materialise. One proposed solution is amending the Crime and Policing Bill to close these loopholes swiftly.

Additionally, legal experts such as Professor Clare McGlynn advocate a broader-brush approach to sexual offences legislation, moving away from reactive measures against each new technological threat. International models are also being examined, such as plans in Denmark to grant individuals copyright over their own likeness, making non-consensual image manipulation illegal even when not explicitly sexual.

The core demand from campaigners is clear: both regulators and ministers must prove that the safety and wellbeing of users, particularly women and children, take priority over the interests of powerful tech platforms. The rules governing the online world must be democratically agreed, robustly enforced, and not ignored by big tech.